Microsoft tests revolutionary cooling technology for artificial intelligence chips

The chips used in data centers to run the latest advances in artificial intelligence generate significantly more heat than previous generations of silicon. Anyone who's ever had an overheating phone or laptop knows that electronics don't like heat. With the increased demand for AI and the emergence of new chip designs, current cooling technology could limit progress within a few years.

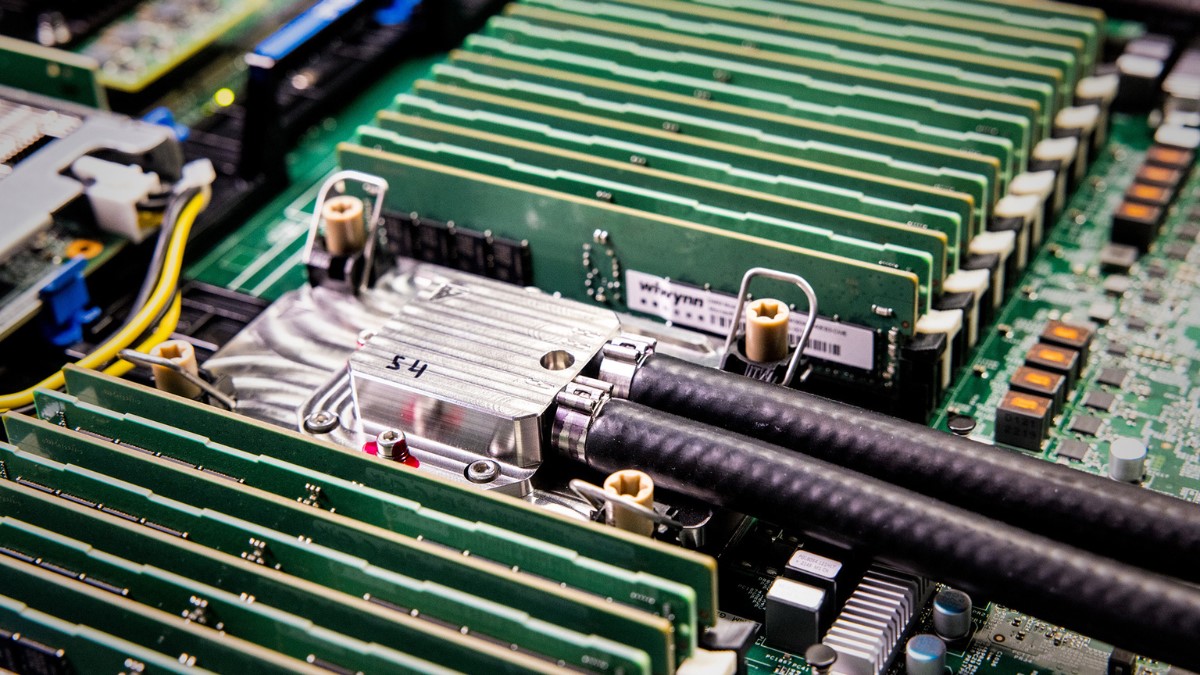

To help address this issue, Microsoft has successfully tested a new cooling system that removes heat up to three times better than cold plates, an advanced cooling technology widely used today. The approach is microfluidics, which brings coolant into the silicon itself, where the heat is generated. Tiny channels are etched into the back of the silicon chip, forming grooves that let the coolant flow directly over the chip and remove heat much more efficiently. The team also used AI to identify the hottest areas on the chip and direct the coolant more precisely.

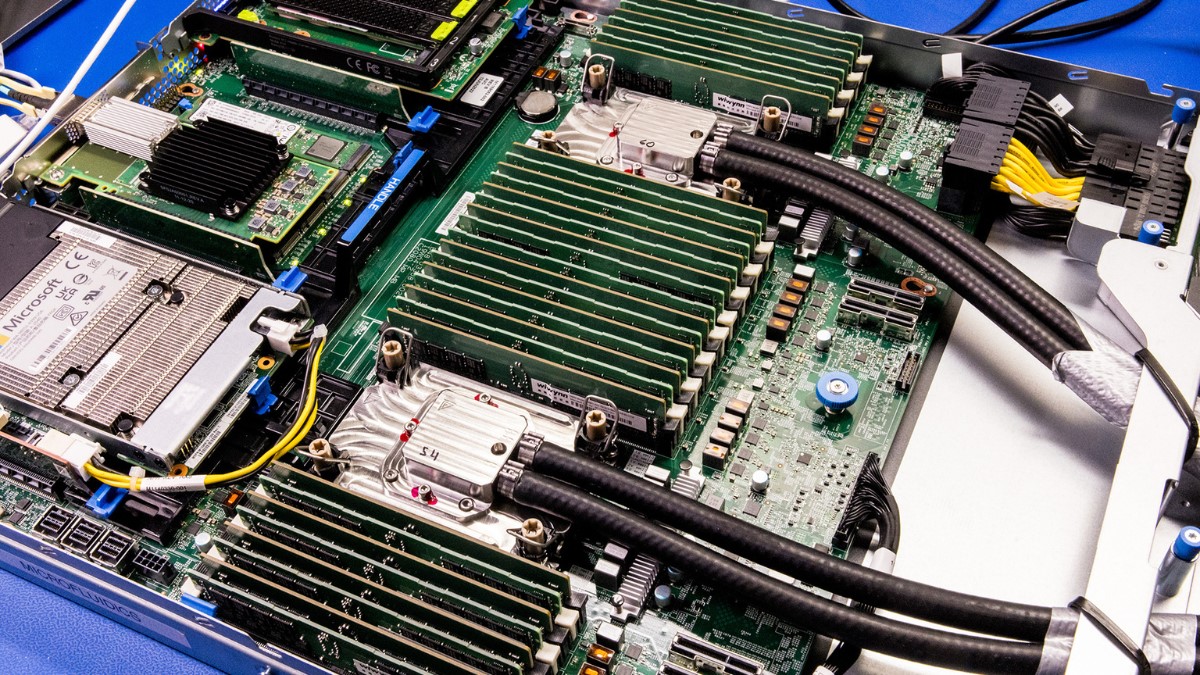

Researchers say microfluidics could increase the efficiency and improve the sustainability of next-generation AI chips. Currently, most GPUs used in data centers are cooled with cold plates, which are separated from the heat source by several layers that limit the amount of heat they can remove.

With each new generation of AI chips, power increases—and so does heat. In about five years, "if you're still relying heavily on traditional cold-plate technology, you're going to be limited," says Sashi Majety, senior technical program manager for Cloud Operations and Innovation at Microsoft.

Microsoft announced that it has successfully developed an on-chip microfluidic cooling system capable of cooling a server running core services during a Teams meeting simulation.

"Microfluidics would enable designs with higher power density, more of the features customers care about, and better performance in a smaller footprint," explains Judy Priest, corporate vice president and chief technical officer of Cloud Operations and Innovation at Microsoft. "But we needed to prove that the technology and design worked, and then the next step was to test reliability."

The company's lab tests have shown that microfluidics removes up to three times more heat than cold plates, depending on the workload and configuration. The technology also reduced the maximum silicon temperature rise inside a GPU by up to 65%, although this varies by chip type. The team expects advanced cooling to also improve power usage effectiveness (PUE), a key indicator of data center energy efficiency, and reduce operating costs.
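To make the 65% figure concrete, note that it applies to the temperature rise above a reference point, not to the absolute temperature. The short sketch below works through one case. The reference temperature (assumed here to be the coolant inlet) and the cold-plate peak are hypothetical values chosen for illustration, not Microsoft measurements.

```python
def peak_after_reduction(reference_c: float, peak_c: float, reduction: float) -> float:
    """Return the new peak temperature when the rise above reference_c shrinks by `reduction`."""
    rise = peak_c - reference_c            # temperature rise above the reference
    return reference_c + rise * (1.0 - reduction)


# Hypothetical inputs: 30 °C coolant inlet, 90 °C peak with a cold plate.
# 0.65 is the article's best-case reduction in maximum temperature rise.
new_peak = peak_after_reduction(reference_c=30.0, peak_c=90.0, reduction=0.65)
print(f"New peak: {new_peak:.1f} °C")
```

Under these assumed inputs, a 60-degree rise shrinks to 21 degrees, so the peak drops from 90 °C to about 51 °C.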

Using AI to mimic nature

Microfluidics isn't a new concept, but making it work is a challenge for the entire industry. "Systems thinking is crucial when developing a technology like microfluidics. You need to understand the system interactions between silicon, coolant, server, and data center to extract maximum benefit," says Husam Alissa, chief technology officer for systems in Microsoft's Cloud Operations and Innovation division.

Just getting the grooves right is a challenge. The microchannels are about as wide as a human hair, which leaves no margin for error. As part of the prototype, Microsoft collaborated with the Swiss startup Corintis to use AI to optimize a bioinspired design that cools hot spots on the chip more efficiently than straight channels, which were also tested. The bioinspired design resembles the veins of a leaf or a butterfly's wing: nature is adept at finding the most efficient paths to distribute what's needed.

Microfluidics requires more than innovative channel design. It's a complex engineering challenge. The team had to ensure the channels were deep enough to circulate coolant without clogging, but not so deep that they weakened the silicon and increased the risk of breakage. In the last year alone, the team has produced four design iterations.

It also required developing leak-proof packaging for the chip, finding the best coolant formula, testing different etching methods, and creating a step-by-step process for adding etching to the chip manufacturing process.

This innovation is just one example of how Microsoft is investing and innovating in infrastructure to meet the demand for AI services and capabilities. For example, the company plans to invest more than US$1.4 billion in capital expenditures this quarter.

These investments include the development of Microsoft's own family of Cobalt and Maia chips, specifically designed to run Microsoft and customer workloads more efficiently. Since the launch of the Cobalt 100 chip, Microsoft and its customers have benefited from its efficient computing power, scalability, and performance.

Even so, chips are only part of the puzzle. Silicon operates within a complex system of boards, racks, and servers in a data center. Microsoft's systems approach involves tuning each part of this system to work in harmony and maximize performance and efficiency. A key step is developing cutting-edge cooling techniques, such as microfluidics.

The next step is to investigate how microfluidic cooling can be incorporated into future generations of Microsoft's own chips. The company will also continue working with manufacturing and silicon partners to bring microfluidics into production in its data centers.

"Hardware is the foundation of our services," says Jim Kleewein, technical fellow on the Microsoft 365 Core Management team. "We all have a stake in that foundation—its reliability, cost-effectiveness, speed, consistent behavior, and sustainability, to name a few. Microfluidics improves each of these: cost, reliability, speed, consistent behavior, and sustainability."

Advantages of microfluidics

A simple Microsoft Teams call illustrates the advantages that microfluidic cooling can offer. Teams isn't a single service, but a suite of around 300 different services that work together seamlessly. One connects the user to the meeting, another hosts the meeting, another stores the chat, another merges audio streams to ensure everyone is heard, another records, and another transcribes.

"Each service has different characteristics and places different loads on the server," explains Kleewein. "The more the server is used, the more heat it generates, which makes sense."

For example, most Teams calls start on the hour or half-hour. The call controller is very busy from five minutes before to three minutes after these times, and is mostly idle the rest of the time. There are two ways to deal with peak demand: install a lot of expensive extra capacity, which sits idle most of the time, or run the servers harder, known as overclocking. Since overclocking makes the chips even hotter, it can only be pushed so far before it risks damaging the components.
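The timing described above implies that the call controller is busy for only a small share of each half-hour. A minimal sketch of that arithmetic follows, assuming the busy windows never overlap and treating "mostly idle" as fully idle, which is a simplification.

```python
BUSY_BEFORE_MIN = 5   # busy from five minutes before each start time (from the article)
BUSY_AFTER_MIN = 3    # until three minutes after (from the article)
PERIOD_MIN = 30       # calls start on the hour or half-hour

# Fraction of each 30-minute period in which the call controller is at peak load.
busy_fraction = (BUSY_BEFORE_MIN + BUSY_AFTER_MIN) / PERIOD_MIN
print(f"busy {busy_fraction:.0%}, idle {1 - busy_fraction:.0%}")
# prints "busy 27%, idle 73%"
```

Under these assumptions, capacity sized for the peak would sit unused for roughly three quarters of the time, which is why briefly overclocking existing servers is attractive.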

"Whenever we have peak workloads, we want to be able to overclock. Microfluidics would allow this without fear of melting the chip, as it's a much more efficient cooling system," says Kleewein. "There are advantages in cost and reliability. And also in speed, since we can overclock."

How cooling fits into the bigger picture

Microfluidics is part of a larger Microsoft initiative to advance cutting-edge cooling techniques and optimize every stage of the cloud. Traditionally, data centers are cooled with air blown by large fans, but liquids conduct heat much better than air.

One form of liquid cooling that Microsoft already uses in its data centers is the cold plate. These plates sit on top of the chips, with cold liquid circulating through internal channels to absorb heat from the chips. The heated liquid then flows away to be cooled again.

Chips are packaged with layers of materials that help spread heat away from hot spots and protect the silicon. But these layers also act as blankets, trapping heat and limiting how well cold plates can cool the chip. Future generations of AI chips are expected to be even more powerful, and too hot to be cooled with cold plates alone.

Cooling chips directly through microfluidic channels is much more efficient—not only for removing heat, but also for the overall system's performance. With fewer insulation layers and the coolant directly touching the hot silicon, the coolant doesn't need to be as cold to be efficient. This saves energy, as it won't require as much cooling, and it also performs the job better than current cold plates. Microfluidics also allows for better utilization of waste heat.
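The reasoning about coolant temperature can be sketched with a simple lumped thermal-resistance model, in which chip temperature equals coolant temperature plus power times the thermal resistance between coolant and silicon. This is a standard textbook approximation, not a model taken from Microsoft's tests, and every number below is hypothetical.

```python
def max_coolant_temp_c(chip_limit_c: float, power_w: float, resistance_k_per_w: float) -> float:
    """Warmest coolant that still keeps the chip at or below chip_limit_c.

    Lumped model: chip_temp = coolant_temp + power * resistance.
    """
    return chip_limit_c - power_w * resistance_k_per_w


# Hypothetical values for illustration only.
CHIP_LIMIT_C = 85.0     # assumed maximum allowed silicon temperature
POWER_W = 500.0         # assumed chip power
R_COLD_PLATE = 0.10     # assumed K/W through the packaging layers and cold plate
R_MICROFLUIDIC = 0.05   # assumed K/W with coolant flowing in the silicon

cold_plate = max_coolant_temp_c(CHIP_LIMIT_C, POWER_W, R_COLD_PLATE)
microfluidic = max_coolant_temp_c(CHIP_LIMIT_C, POWER_W, R_MICROFLUIDIC)
print(f"cold plate: coolant up to {cold_plate:.0f} °C")
print(f"microfluidic: coolant up to {microfluidic:.0f} °C")
```

With these assumed values, halving the thermal resistance lets the coolant run 25 degrees warmer for the same chip temperature, which means less energy spent chilling it.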

Microsoft is also looking to optimize data center operations through software and other approaches. "If microfluidic cooling uses less energy in data centers, there will be less pressure on the power grids of nearby communities," says Ricardo Bianchini, a Microsoft technical fellow and corporate vice president for Azure who specializes in compute efficiency.

Heat also imposes limits on data center design. One of the benefits of a data center is that servers are physically close together. Distance increases latency, or the communication time between servers. But today, servers can only be clustered so closely together before heat becomes a problem. Microfluidics would allow for increased server density, meaning data centers could increase their computing capacity without the need for new buildings.

The future of chip innovation

Microfluidics also has the potential to pave the way for new chip architectures, such as 3D chips. Just as clustering servers reduces latency, stacking chips reduces it even further. This type of architecture is challenging because it generates a lot of heat.

However, microfluidics places the coolant extremely close to where the power is consumed, so "we can circulate the coolant within the chip," as would be the case in 3D designs, Bianchini explains. This would require a different microfluidic design, with cylindrical pins between the stacked chips, similar to pillars in a multi-story parking garage, with the coolant flowing around them.

"Whenever we can do things more efficiently and simply, we open up the opportunity for new innovations and chip architectures," says Priest.

Eliminating the heat limit could allow for more chips per rack in the data center or more cores on a chip, increasing speed and enabling smaller, more powerful data centers.

By demonstrating how new cooling techniques like microfluidics can work, Microsoft hopes to pave the way for more efficient and sustainable next-generation chips across the industry.

"We want microfluidics to become mainstream, not just something we do," says Kleewein. "The more people adopt it, the better and faster the technology will evolve—and that's good for us, for our customers, for everyone."

About Microsoft

Microsoft (Nasdaq “MSFT” @microsoft) creates AI-powered platforms and tools to deliver innovative solutions that meet customers' evolving needs. The technology company is committed to making AI widely available and to doing so responsibly, with a mission to empower every person and every organization on the planet to achieve more. Having operated in Brazil for 36 years, the company launched the Microsoft Mais Brasil Plan in 2020 and has since significantly expanded its investments in cloud infrastructure and artificial intelligence, in addition to leading initiatives such as the ConectAI program, which aims to train 5 million Brazilians in AI skills by 2027.
